Tacit expertise is your advantage. That’s because AI scales what’s documented. If your secret sauce lives in heads, not docs, AI will scale your competitor’s average instead of your edge. Document tacit knowledge to capture the judgment calls that make your frameworks work. Then use AI to scale what’s true.

I’ve been following Rick Beato for years.

For those unfamiliar, Rick hosts an excellent YouTube channel for music professionals, amateurs, and geeks like me.

As a musician and a marketer, I find that Rick’s insights ring loud and clear when it comes to AI adoption.

In the video below, Rick said:

“A lot of musicians talk to me and they’re really freaking out about these [AI] programs.”

A lot of GTM teams are freaking out too.

You don’t need to be a musician to understand what Rick’s getting at. His real-world examples are not exclusive to the music industry.

You may not know Max Martin, but you probably know the dozens of number-one songs he’s written or produced for Taylor Swift, The Weeknd, Ariana Grande, and the list goes on.

He’s given fewer than five public interviews in the past decade. When he has talked, he shares high-level principles:

But the thousands of micro-decisions he makes in the studio? Those aren’t documented.

How does he know when to rewrite a pre-chorus for the 47th time? What makes him choose one word over another for sound versus meaning? When does he stop?

That level of expertise (the tacit, moment-to-moment judgment) isn’t captured.

Same with Serban Ghenea, who’s mixed most major pop hits of the past 25 years. He’s given maybe two interviews in twenty years. When he does talk, he says things like, “There’s no bag of tricks. The song dictates the tools.”

Rick’s point: Even when top practitioners share something, they don’t share the real how, the accumulated judgment that makes their work different.

That’s the nuance. That’s human.

The same gap exists in your GTM team. And it’s not because you don’t have ANY documentation.

You probably have countless playbooks, specs, and guides.

But the micro-moves that separate your best performers from everyone else? Those aren’t captured.

That’s your Max Martin Gap.

The judgment calls that make your frameworks work in YOUR context.

Rick tested this with a simple example:

Why? Different training data.

ChatGPT was trained heavily on Wikipedia. The other models were trained on YouTube, where the answer exists in a popular video.

Same question. Different models. Different training data. Different results.

(If you want to see Rick break this down with the 52 factorial test, including his nine-year-old son reciting it years ago, watch the full video.)

Now think about your GTM motion:

If that’s not written down, AI can’t learn it.

More importantly: your next hire can’t either.

Let’s say you ask ChatGPT to draft a sales email. Build a positioning doc. Forecast pipeline. Whatever.

It spits out something fluent and confident.

Your team thinks, “Good enough.”

You ship it.

Except it’s generic. You just spent time and money to sound like everyone else.

(Ironically, B2B Tech Marketing has been doing this without AI for years!)

The trap isn’t that AI gets it wrong (it “can make mistakes”).

The trap is that AI gets it average, and average feels good enough when you’re moving fast.

I’ve fallen into this trap. Everyone does. But once you see the patterns, you self-correct (hopefully).

I use AI every day (including for this article). Not to write for me, but to help me ideate, research, fact-check, and provide structure.

(I have ADHD so every bit of structure helps... haha!)

I also acknowledge that I use AI as an assistant at the bottom of every article.

Instead of feeding AI generic prompts, train the model on your actual documented process.

The “real how” from your best performers can help you stand out and scale faster than those who rewrite other blog posts and summarize frameworks they never use.

The companies that will win with AI aren’t the ones with the best prompts. They’re the ones who documented what makes them different before they handed anything to a model.

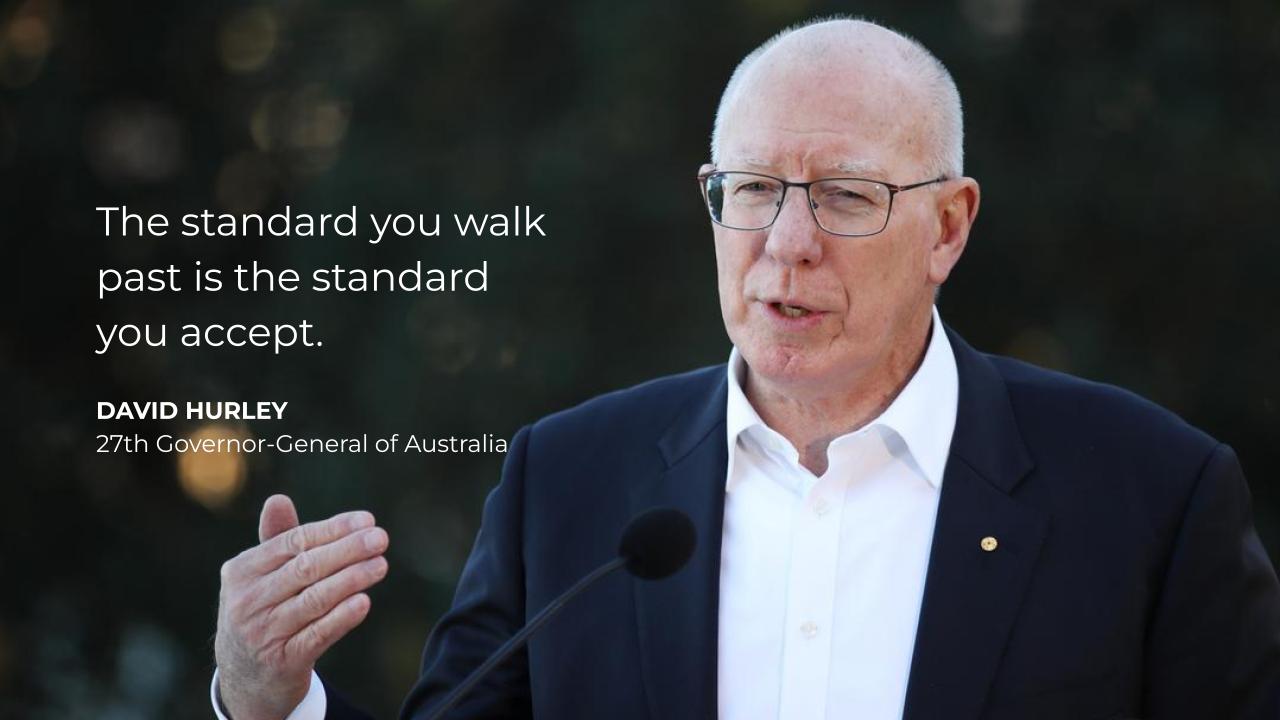

David Hurley said it best:

If we accept generic AI output because it’s fast, we’re accepting low-level standards... or no standards at all.

Rick’s video is a good reminder that the AI limitations in the music industry apply to any industry.

We already know how AI is replacing manual tasks (like every other major tech revolution).

But will it ever be able to replace the tacit nuance that comes from human creativity?

We don’t need to freak out.

We just need to be creative.


Cheers!

This article is AC-A and published on LinkedIn. Join the conversation!