Positioning, Messaging, and Branding for B2B tech companies. Keep it simple. Keep it real.

By Gerard Pietrykiewicz and Achim Klor

Achim is a fractional CMO who helps B2B GTM teams with brand-building and AI adoption. Gerard is a seasoned project manager and executive coach helping teams deliver software that actually works.

During a recent (and timely) Lenny’s Podcast episode, Jason Lemkin, cofounder of SaaStr, dropped a few jaws and drew some eye-rolls (just read the comments) with this:

He used to run go-to-market with about 10 people. Now it’s 1.2 humans and 20 agents. He says the business runs about the same.

That’s a spicy headline.

It’s also the least useful part of the story.

If you’re trying to adopt AI in GTM without breaking trust, below are field notes worth considering.

Jason’s most useful advice isn’t “immediately replace your GTM team.”

It’s the Incognito Test: go through your own GTM motion the way an anonymous prospect would.

You will probably find something broken. Maybe support takes too long. Maybe the contact “disappears” into the CRM ether. Maybe your SDRs take three days to respond (or never do).

Pick the thing that makes you angriest. That’s your first agent use case.

One workflow. One owner. One month of training. Daily QA.

Then decide if you need a second, a third, and so on.

This is a pattern Gerard and I keep seeing too.

The real question isn’t “What’s our AI strategy?” It’s “What’s the smallest thing I can do right now that actually helps?”

Source: Lenny’s Podcast, 1:25:29

Two salespeople quit on-site during SaaStr Annual. No, not after the event… during.

Jason Lemkin had been through this cycle before. Build a team, train them, watch them leave, rinse and repeat. This time, he made a different call:

“We’re done hiring humans in sales. We’re going all-in on agents.”

Fast-forward six months: SaaStr now runs on 1.2 humans and 20 AI agents. Same revenue as the old 10-person team.

Source: Lenny’s Podcast, 0:11:52

Jason said this about SaaStr’s “Chief AI Officer”, Amelia* (a real person):

“She spends about 20%* of her time managing and orchestrating the agents.”

Every day, agents write emails, qualify leads, set meetings. Every day, Amelia checks quality, fixes mistakes, tunes prompts.

This isn’t “set it and forget it.” It’s more like running a production line. A human checks it, fixes it, and tunes the system so it does not drift.

Agents work evenings. Weekends. Christmas.

But if nobody’s watching, the system decays… fast.

And that’s the part vendors don’t put in their demos.

* Amelia is the 0.2 human: she spends 20% of her time managing agents. Jason is the 1.0.

Jason’s breakthrough came from a generic support agent. It wasn’t built for sales. It wasn’t trained on sponsorships. But it closed a $70K deal on its own because it “knew” the product and responded instantly at 11 PM on a Saturday.

That’s why this conversation matters.

Not because an agent can write emails. But because one can close revenue if you give it enough context and keep it on rails.

Source: Lenny’s Podcast, 0:07:53

Jason’s experience lines up with what Gerard and I have also seen in the field.

Start with a real workflow, not an “AI strategy”

Speed does not fix the wrong thing

Adoption is a leadership job

Whenever new tech comes along, almost everybody immediately wants the potential upside.

Almost nobody wants the governance.

It reminds me of the dot-com boom. Everyone wanted a website. No one wanted to manage the content ecosystem (that’s still true today).

Agentic AI, like what Jason implemented at SaaStr, is no different.

Customer-facing GTM output carries real risk for your brand reputation. If your AI agents hallucinate, spam, or misread context, you don’t just lose a meeting. You lose trust.

And that’s the simple truth we keep coming back to:

The constraint is not capability. It’s trust. And trust is earned, not bought (or a bot).

In our AI adoption challenges article, we said tool demos hide the messy middle: setup, access, QA, and the operational reality of keeping this stuff reliable.

Jason’s story doesn’t remove that mess. It confirms it. He just decided the mess was worth automating.

Watch the full conversation: Lenny's Podcast with Jason Lemkin

If you like this co-authored content, here are some more ways we can help:

Cheers!

This article is AC-A and published on LinkedIn. Join the conversation!

Last week, Mark Stouse and I wrapped a preview for a new 4-Part Causal CMO LinkedIn Live series running from January to March. The message is clear and urgent. The rules have already changed. Budgets will follow. So if you’re still defending GTM spend with correlation charts while deals continue to stall, 2026 is going to hurt.

Starting in January, Mark and I will cover what GTM teams need to unlearn and relearn in 2026.

Here’s the schedule:

Details for Episode 1 will be posted on LinkedIn in early January.

Now on to the recap.

Mark’s recent 5-Part Go-to-Market Effectiveness Report triggered over 4,200 DMs in two weeks. And his Causal AI advisory firm, Proof Causal AI, has been inundated with inbound inquiries. Most of them are not coming from CMOs. And that is troubling.

“Between 80 and 85% of our inbounds are from Finance teams. The attention from Finance leaders and CEOs was off the charts. CMOs were in the minority.”

That tells you all you need to know about where GTM accountability is headed. Leadership no longer cares about MQLs and busy dashboards.

They care about:

Part of this shift is legal and governance.

Delaware oversight expectations and AI disclosure scrutiny have raised the bar on how leaders justify spend and data quality.

“This is going to be the storyline of 2026 and for sure 2027. In 2027, a lot of this is going to be far more visible and unpleasant.”

Deeper dive: We’ve already covered the Delaware Ruling, and Mark wrote a great piece on the B2B Governance Revolution.

Mark used the Gartner hype cycle lens to frame what's coming in 2026. When aspiration meets operating reality, you hit the trough of disillusionment.

“Reality is not open for discussion. It just is what it is.”

This echoes something former GE CEO Jack Welch said over 30 years ago:

In GTM, that reality shows up as longer cycles, smaller deals, and more deals ending with no decision.

If your playbook still “works,” but outcomes don’t, there’s your Reality Gap.

Mark’s published work and recent coverage point to a long decline in GTM effectiveness since 2018. In one of his recent MarTech articles, he cites effectiveness falling from 78% (2018) to 47% (2025) across 478 B2B companies.

And no, GTM teams didn’t suddenly get dumb.

“One out of every two dollars in go-to-market is waste today. Why? Because go-to-market teams have been ignoring externalities… the 70 to 80 percent of what causes anything to happen, which is the stuff we don’t control.”

While external forces got stronger and changed faster, most GTM teams kept optimizing internal metrics that do not predict revenue.

Founders and leaders should care about this more than anything else.

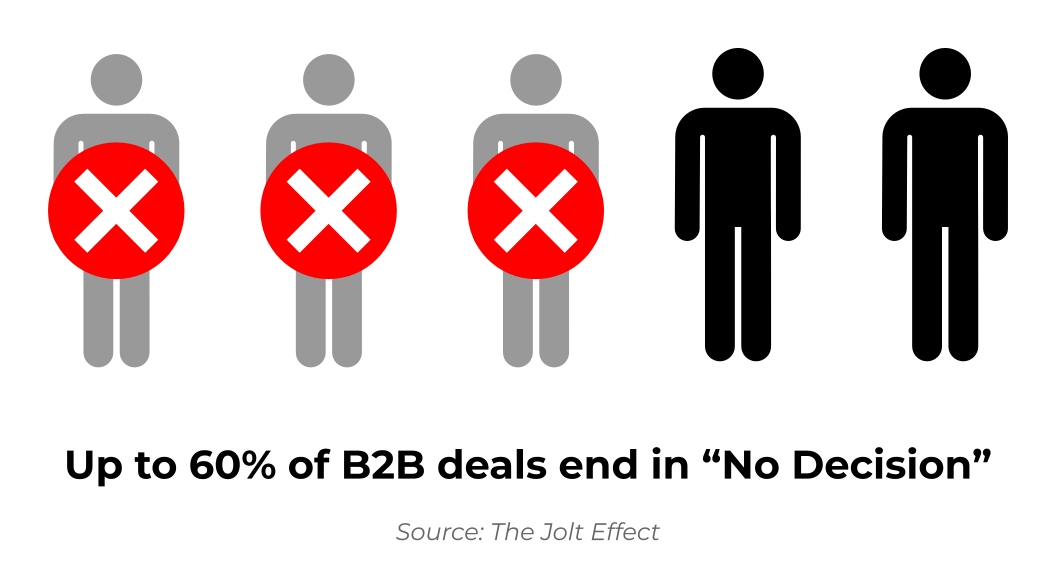

You can lose to a competitor and still learn something. But if you lose to indecision, you get nothing but sunk cost.

Matt Dixon and Ted McKenna, authors of The JOLT Effect, published a solid proof point: in a study of more than 2.5 million recorded sales conversations, 40% to 60% of deals ended in “no decision.”

And as Mark explains, the financial impact is sobering:

“If it’s taking your sales teams a lot longer to close smaller deals, and a lot of the deals are closing without any decision, then you have no offset revenue for CAC.”

So when GTM leaders defend spend with correlation, Finance hears: “We can’t explain why buyers aren’t deciding.”

After we went off air, Mark dropped the best nugget of the whole conversation:

“There has been a culture, a narrative control, that has been built for decades. And they are deeply concerned about any system that is beyond their control. And the whole thing becomes completely unsustainable.”

That explains a lot:

Causality does the opposite. It forces reality into the room. Including the parts you do not control.

And that’s terrifying for teams who’ve built careers on controlling the story.

Mark’s Tip: You can’t graft new reality onto old systems. You have to unlearn first.

Have a wonderful holiday. See you in January.

Missed the preview session? Watch it here

If you like this content, here are some more ways I can help:

Cheers!

This article is AC-A and published on LinkedIn. Join the conversation!

By Gerard Pietrykiewicz and Achim Klor

Achim is a fractional CMO who helps B2B GTM teams with brand-building and AI adoption. Gerard is a seasoned project manager and executive coach helping teams deliver software that actually works.

Vibe-coding gets hyped as a way to “build an app in minutes.” That’s not the useful part. The useful part is this: it lets you create disposable prototypes fast enough that teams can stop guessing and start reacting. It’s a clarity tool, not a shipping tool.

Many teams (engineering, product, GTM) still work like this:

Write a doc. Explain it in a meeting. Someone turns it into wireframes or copy. Meet again. Then build something so everyone can finally see what you meant.

That’s a relay race with too many handoffs.

Vibe-coding collapses it into one run. Idea to clickable prototype to feedback in the same sitting.

Fewer handoffs. Fewer miscommunications. Fewer sprints wasted building the wrong thing because everyone had a different mental picture.

Poor requirements are among the top reasons software projects fail, and misalignment between what's needed and what's built drives rework and delays. We've written before about why AI adoption stalls. Vibe-coding addresses that gap early, when changes are still cheap.

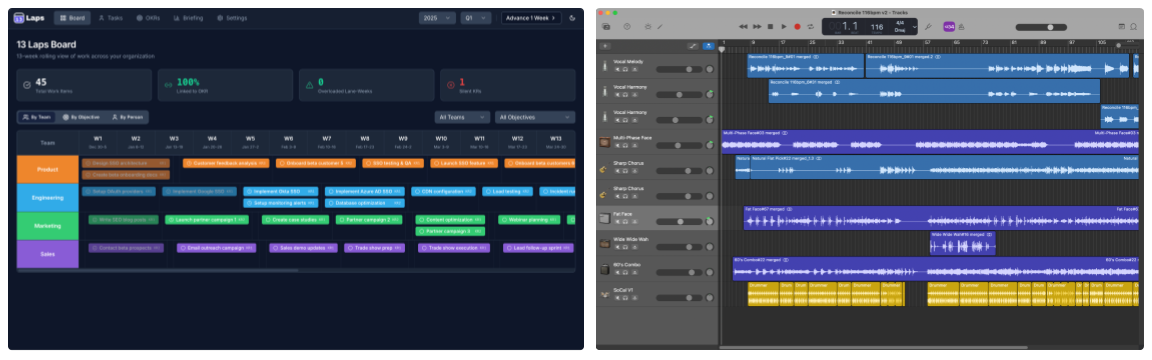

Back in the 90s, I used a Fostex 4-track to record demo songs on cassette tapes (see pic below). You’d lay down drums and bass first, then guitars, keys, vocals, etc. If you needed more than four tracks, you’d mix some down to free up space. Every time you did that, you’d lose a bit of quality.

It was limited and rough. But it got the song down so the band could practice their parts and make decisions.

You weren’t making the album. You were capturing enough structure so everyone could hear the same thing and figure out what worked and what didn’t.

Then you’d take that into a real studio and record it properly. That part was expensive and laborious, so you didn’t want to walk in there still arguing about the chorus.

The demo tape forced clarity before the expensive work started.

Vibe-coding (and GenAI more broadly) plays the same role for product work. The prototype is the demo, not the album.

And here’s what people miss: efficiency is a byproduct of effectiveness. If you build the wrong thing faster, you just waste time at a higher speed. 8 × 0 still equals 0.

Gerard recently built a DRIP calculator prototype.

He started with a short Product Design Doc (PDD) covering roles, basic flow, and key screens. He used Manus to shape prompts for Bolt, pasted them in, and generated a working front-end prototype.

PDD to clickable prototype: 28 minutes.

Not production code. Not secure. Not deployable. But it had screens, navigation, inputs, and a flow you could walk through with a dev team.

That changes the conversation.

Instead of debating abstract requirements, you click through like a user would and the real questions surface:

You see where the flow breaks. You see what’s confusing. You see what you can cut without losing the core experience.

Gerard's 28-minute prototype helped the team clarify requirements before dev work began, avoiding the usual back-and-forth about what to build.

That’s the “demo tape effect”. You expose weak parts while changes are still cheap.

When Pro Tools came out, home recording got better, but it was still too expensive. Hardware, interfaces, and software required a budget most musicians didn’t have.

Apple’s GarageBand changed that (bottom right, one of my recent “demo songs”). Suddenly anyone could lay down ideas without dishing out loads of cash. It’s not Logic or Final Cut, but it’s good enough.

AI feels similar to me (top left, one of my prototype “demos” in Lovable). Tools like Claude, ChatGPT, Lovable, and Cursor let me build prototypes much more quickly than before. Not production-quality (or well-written). Not secure. But good enough to test an idea and get feedback before committing real resources.

That shift from “you need professionals” to “you can try this yourself” changes who gets to prototype and how fast ideas move.

Vibe-coding is a fast way to get concrete.

It’s not a replacement for engineering. The prototype is disposable, like a demo in GarageBand. Useful, rough, temporary.

Your real product still needs code standards, reviews, testing, security, proper architecture.

Vibe-coding just helps you walk into that work with fewer assumptions and tighter decisions.

Think of it as the new whiteboarding. It shows everyone the same thing before you start building the real thing.

Looking for more ways to make AI adoption practical? See how we use AI to draft slide decks in minutes.

Again, it’s not perfect, but progress trumps perfection.

Pick one feature you’re planning to build next month. Use a vibe-coding tool to generate a clickable prototype this week. Spend 30 minutes walking your team through it.

Track what happens: How many requirements get clarified? How many assumptions get challenged? How many “oh, I thought you meant...” moments do you avoid?

That’s the value. Not the prototype itself. The alignment it creates.

Once you align on what to build, let your team build the actual product (the right product) without wasting a sprint on guesswork.

If you like this co-authored content, here are some more ways we can help:

Cheers!

This article is AC-A and published on LinkedIn. Join the conversation!

Does this sound familiar?

“Last month I deleted 100 marketing emails without reading them. This month our marketing team sent 300.”

Every B2B marketer is guilty of this (me included).

We build funnels, campaigns, and attribution models as if buyers care about our products as much as we do. But when we’re the ones buying, we behave the exact opposite way.

We sell as if buyers care. We buy as if we don’t. Oh, the irony (and hypocrisy).

That’s the gap where B2B GTM waste festers.

When B2B buyers are in-market, they have serious considerations, and the stakes rise the more complex and pricey the solution gets:

Everything else (UX, brand, clever ads, slick design, roadmap, PDFs) sits at the edge of those questions. Still important, yes. But still secondary.

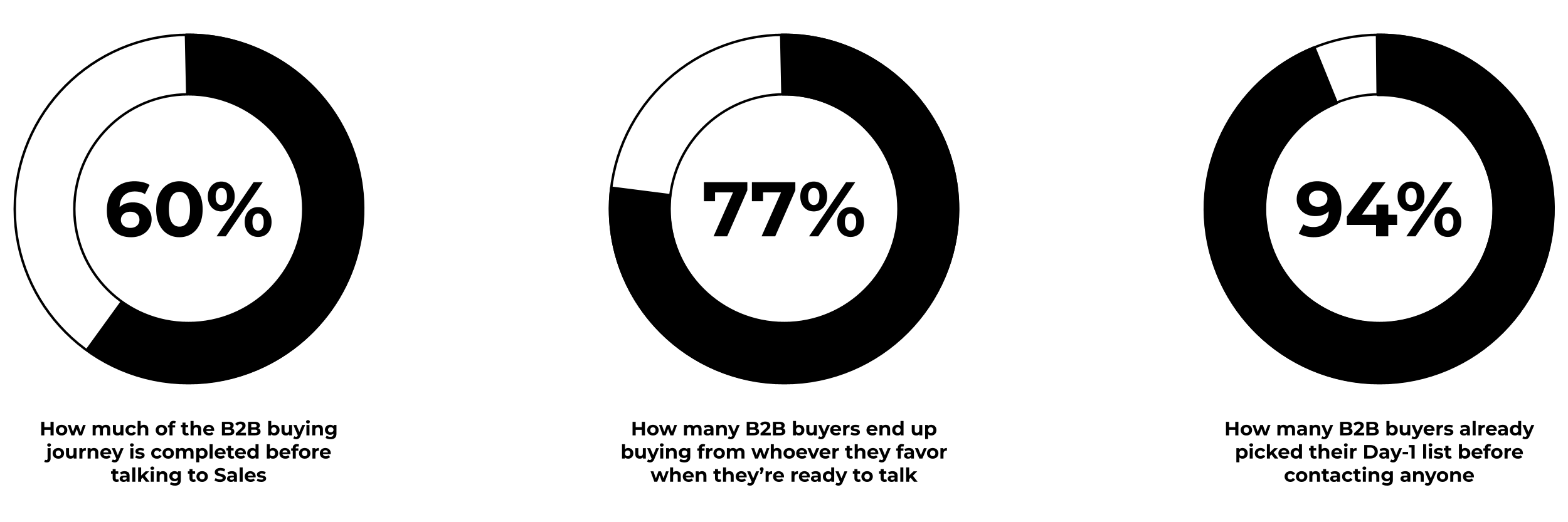

Here’s what we now know thanks to research from Ehrenberg-Bass:

The majority of buyers aren’t shopping when we think they are. They don’t care about our bright shiny new thing because they’re happy with the one they already have.

When they finally do care, 6sense’s 2025 Buyer Experience Report shows most of the journey is already done before they talk to sales. By that point, the favorite usually wins.

So the attention math looks something like this:

Inside your company, you have weekly GTM meetings and dashboard reviews. On the buyer side, you might get 45 minutes on a Thursday if you’re lucky enough to make their Day-1 list.

The crazy thing is that even with this reality staring us in the face, we still resist it. As if there HAS to be a workaround, even though we would never take the bait ourselves when we’re in buying mode.

Here’s the empathy gap.

When we sell, we act as if:

When we buy, we:

When the shoe is on the other foot, marketers and sellers are no different. We’re just as impatient and overloaded as every other buyer.

We know how little we care as buyers. But we insist on building GTM systems that assume buyers care a lot. They don’t. Neither do we.

(I’ve written before about this disconnect. It’s the elephant in every boardroom.)

Buyers are selfish because their job is to protect their time, their team, and their career. Marketing’s job is to make that easier, not harder.

So design for that reality.

We’re all buyers. We delete emails and screen calls. We bounce from sites that start with how great “we” are and how revolutionary “our” thing is.

Many of us block ads, browse anonymously, use fake email accounts, and spend less time reading marketing emails.

The people creating campaigns are the same people ignoring campaigns when they're buying.

The question GTM teams should be asking is: “If I wouldn’t engage with this as a buyer, why am I building it as a seller?”

Most buyers aren’t shopping today. When they are, we only get a sliver of their time. Inside that sliver, they care about their problem, their risk, and their time (not ours).

So be creative! Build something you’d actually engage with if the roles were reversed. Make it easy for them to say “Let’s see if this might actually help.”

Don’t know where to start?

Ask the buyer you already know best: You.

If you like this content, here are some more ways I can help:

Cheers!

This article is AC-A and published on LinkedIn. Join the conversation!

By Gerard Pietrykiewicz and Achim Klor

Achim is a fractional CMO who helps B2B GTM teams with brand-building and AI adoption. Gerard is a seasoned project manager and executive coach helping teams deliver software that actually works.

Here is an effective way to use an AI tool like Manus.im. Pick one shared task everybody hates, like building slide decks. Use AI to get to an 80% draft in minutes. Spend the saved time on decisions and conversations, not formatting.

Over the past few months, we’ve written about why AI adoption frustrates managers and how leaders can remove the barriers.

This time we want to stay close to the ground.

The real question is no longer “What’s our AI strategy?” It’s “What is the smallest thing I can do right now that actually helps my team?”

Not that long ago, simple AI tasks were clunky (one could argue some still are).

You wrote a prompt, fixed the output, and still spent an hour or so cleaning it up.

That has changed.

Today, GenAI and tools like Manus can take you from a blank page to an acceptable first draft with no integrations and no scripts.

So instead of chasing a giant AI program across the whole company, start with one workflow everyone already knows and already dreads.

PowerPoint.

At some point, most of us have to create a slide deck. It’s repetitive work. It eats time. Most people do not enjoy doing it (or sitting through bullet-point hell).

Recently, Gerard has been running the following use case with his team.

1. Start with a single prompt inside Manus (you can grab the prompt here):

2. Then attach a document with the content and run the prompt.

3. In about five minutes, Manus spits out a fully structured deck with:

Is it perfect? No.

Is it good enough to work with? Yes. Roughly 80% of the way there, in fact.

Now you have oodles of time left to focus on fine-tuning the idea inside the deck instead of the deck itself.

This is where expectations matter.

AI will not:

The quality of the presentation is still limited by your own tacit knowledge and the input you give it. If the document is vague or wrong, the deck will be vague or wrong.

What AI can do:

AI gets you to a rough but usable version fast. You still need your tacit knowledge (those fine judgment calls in your head that never make it into documentation) to edit, refine, and present it well.

That last 20% is where your expertise shows up.

AI does not do that part for you (nor should it).

When AI adoption stalls, it’s often because we start too big.

We talk about agents and platforms that most people will never touch. Or we tell everyone to “go use AI” for random tasks like email summaries, then walk away.

Real adoption looks different.

It starts with one visible, repeatable use case that:

A slide deck generator does that:

When we see something like, “Watch this: five minutes from doc to deck,” we don’t just hear about AI. We feel the benefit.

Skepticism turns into curiosity.

Curiosity turns into “Can I try that with my next presentation?”

And that’s the point.

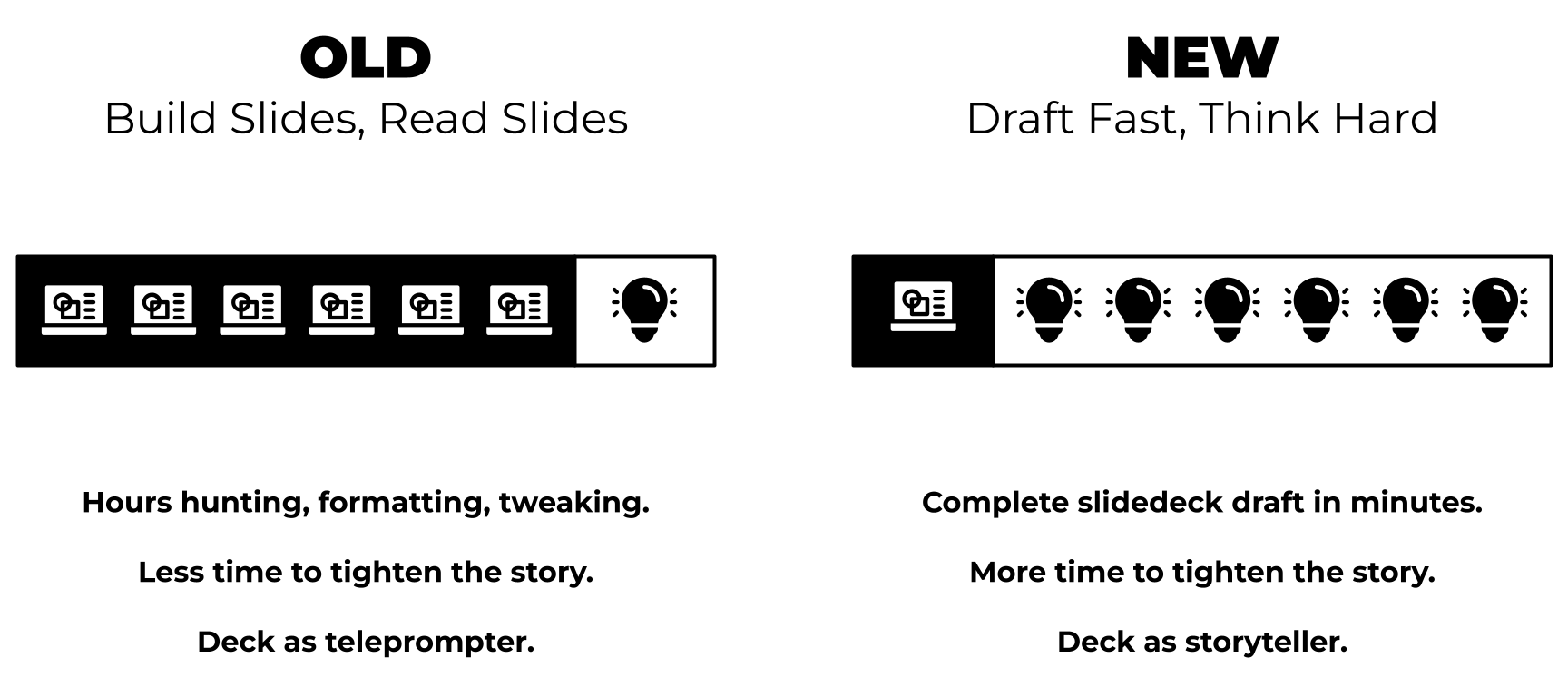

It’s easy to treat this only as a time-saver.

But the point of a deck is not to have slides that act as a teleprompter. The point is to communicate an idea clearly enough that your team can act on it.

If AI takes the build time from two hours down to five minutes, the real win is what you now do with those 115 minutes you just freed up:

AI makes you more efficient at building the artifact.

Use the time you save to get better at the communication itself.

You don’t need Manus specifically. Any solid AI tool works.

Here's the workflow:

Now you are not “playing with AI.”

You are changing how work gets done in a way your team can see and copy.

You’re helping your team become more efficient because they are more effective first.

We didn’t include the full prompt here because it is long and specifically tuned for Manus.

If you want to give it a try using Manus, grab the free Google Doc here.

Feel free to share it and make it your own.

Again, the point here is not “magic words”.

It’s the pattern:

That’s how real adoption happens: one visible workflow at a time.

If you like this co-authored content, here are some more ways we can help:

Cheers!

This article is AC-A and published on LinkedIn. Join the conversation!

After more than 25 years in B2B Tech Marketing, I’ve seen this movie too many times, and it keeps rerunning. So allow me to beat a dead horse.

A leader demands that marketing prove ROI despite years of no GTM plan and no tracking beyond MQLs, just guesswork and spray-and-pray. Yet they want the receipts.

It’s crazy talk that sends marketing on wild goose chases instead of fixing the problem.

And it’s not a measurement problem. It’s a management problem.

Fixing it starts with straight talk about operationalizing the basic GTM tracking that should have been in place from day one, like cleaning up CRM contacts that have zero context.

Within a few months (yes, it takes time to build these systems), patterns emerge that couldn’t be seen before, such as buying group signals, channel performance, and actual sales cycles.

But ROI? No.

There’s nothing to compare it to because marketing is building the measurement system while being asked to justify the investment.

It’s Groundhog Day all over again.

Neil deGrasse Tyson nailed why this keeps happening:

Neil’s advice?

“The scientific method is there to save you from yourself, to remind you that every conclusion must be tested, questioned, and refined.”

Neil deGrasse Tyson

Research from 6sense shows that 81% of B2B buyers choose their vendor before ever contacting sales. Yet most marketing teams are still measured on MQLs (Marketing Qualified Leads) that capture only 2-3 out of 10 buying group members.

Your marketing might be working brilliantly (or not). You just can’t see it.

Kerry Cunningham’s “MQL Industrial Complex” explains why: Only 3-5% of website visitors fill out forms.

The other 95-97% (including the CFO checking you out before signing off, the VP of Engineering evaluating your technical docs, and the procurement lead comparing pricing) remain completely anonymous and invisible.

For the past 15-20 years, B2B tech leaders (including marketing) have operated on a fundamental misunderstanding about how marketing works and how it’s measured. Not because they’re incompetent, but because the measurement systems we all adopted from companies like Eloqua, Marketo, and HubSpot were built on flawed assumptions. (More on how this happened here.)

The truth is, marketing does not create revenue directly. It can only influence revenue by multiplying sales effectiveness.

One of the best thought leaders and data scientists on this topic is Dale W. Harrison, and his research demonstrates this.

When properly instrumented, marketing investment generates a 20:1 return, not by “driving” revenue, but by making sales 3x more efficient. Close rates improve from 4:1 to 12:1. Sales cycles shorten. Deal sizes increase.

But these effects compound over quarters (sometimes years), not weeks. 90% of revenue influenced by a quarter’s marketing activity won’t be recognized until 8-9 months later or longer, depending on how complex and pricey your tech is.

This is why your CFO can’t see it.

Because of time lag, you’re measuring in the wrong timeframe with the wrong metrics.
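Here is a back-of-the-envelope sketch in Python that makes the time-lag point concrete. It only combines two figures cited above: the 20:1 return, and the claim that roughly 90% of a quarter’s influenced revenue lands 8-9+ months later. The function name `apparent_roi` and the $100K spend are hypothetical, and real lag profiles will vary by company and deal size.

```python
def apparent_roi(spend, eventual_return_multiple, share_recognized_in_window):
    """ROI multiple a report would show if it only counts revenue
    recognized inside the measurement window.

    spend: marketing spend for the quarter (hypothetical input)
    eventual_return_multiple: total influenced revenue / spend once lagged deals close
    share_recognized_in_window: fraction of that revenue closed inside the window (0..1)
    """
    if spend <= 0:
        raise ValueError("spend must be positive")
    if not 0.0 <= share_recognized_in_window <= 1.0:
        raise ValueError("share must be between 0 and 1")
    influenced_revenue = spend * eventual_return_multiple
    visible_revenue = influenced_revenue * share_recognized_in_window
    return visible_revenue / spend


if __name__ == "__main__":
    # Illustrative inputs: the 20:1 multiple and the "about 90% lands later"
    # figure cited in the article; the $100K spend is made up.
    spend = 100_000
    print(apparent_roi(spend, 20, 1.0))   # full picture, after lagged deals close
    print(apparent_roi(spend, 20, 0.10))  # same-quarter view: only ~10% is visible
```

The spend cancels out. The ROI a same-quarter report shows is just the eventual multiple times whatever share of revenue happens to land inside the window. Under these assumptions, a 20:1 program reads as roughly 2:1.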

Short answer: Stop asking marketing to prove last quarter’s ROI, especially if you don’t have the systems in place to track and measure the YoY data needed to answer that question.

So ask better questions that reveal multiplier effects:

Pull your leads from the last 12 months (if you have the data!) and group them by company domain. You’ll typically see 1.5-2.5 leads per account.

Now ask:

Answers to those questions help you establish buying group signals that can predict pipeline better than individual lead scores.

Five people from a target account researching your solutions (even if no one fills out your forms) is a stronger signal than one person downloading a PDF.
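The lead-grouping exercise above can be sketched in a few lines of Python. This is a minimal illustration, not a CRM integration. It assumes:

- leads are exported as records with an `email` field;
- an account can be approximated by the email’s domain;
- free-mail domains should be dropped.

The names `summarize_accounts` and `buying_group_accounts`, the free-mail list, and the `min_contacts=3` cut-off are all hypothetical choices. The sketch can also only count people who filled out a form, so it undercounts the anonymous majority of the buying group.

```python
from collections import defaultdict
from typing import Optional

# Hypothetical list of free-mail domains to ignore; extend it for your own data.
FREE_MAIL_DOMAINS = {"gmail.com", "yahoo.com", "outlook.com", "hotmail.com"}


def company_domain(email: str) -> Optional[str]:
    """Return the lowercased domain of an email address, or None if the
    address is malformed or uses a free-mail provider."""
    local, sep, domain = email.strip().lower().rpartition("@")
    if not sep or not local or not domain or domain in FREE_MAIL_DOMAINS:
        return None
    return domain


def summarize_accounts(leads):
    """Group lead records by company domain.

    Returns {domain: {"leads": number of lead records,
                      "contacts": number of distinct email addresses}}.
    """
    lead_counts = defaultdict(int)
    contacts = defaultdict(set)
    for lead in leads:
        domain = company_domain(lead["email"])
        if domain is None:
            continue  # skip free-mail and malformed addresses
        lead_counts[domain] += 1
        contacts[domain].add(lead["email"].strip().lower())
    return {d: {"leads": lead_counts[d], "contacts": len(contacts[d])}
            for d in lead_counts}


def average_leads_per_account(summary) -> float:
    """Mean number of lead records per account (0.0 if there are none)."""
    if not summary:
        return 0.0
    return sum(s["leads"] for s in summary.values()) / len(summary)


def buying_group_accounts(summary, min_contacts: int = 3):
    """Accounts where at least `min_contacts` distinct people engaged.
    The default of 3 is an arbitrary illustrative threshold."""
    return sorted(d for d, s in summary.items() if s["contacts"] >= min_contacts)


if __name__ == "__main__":
    # Made-up sample data for illustration only.
    leads = [
        {"email": "cfo@acme.com"},
        {"email": "vp.eng@acme.com"},
        {"email": "buyer@acme.com"},
        {"email": "buyer@acme.com"},      # same person filled out a second form
        {"email": "ops@globex.io"},
        {"email": "someone@gmail.com"},   # free-mail address, excluded
    ]
    summary = summarize_accounts(leads)
    print(summary)
    print(average_leads_per_account(summary))
    print(buying_group_accounts(summary))
```

Run as-is, the sample data leaves two accounts after the free-mail filter, averaging 2.5 leads per account. Only acme.com clears the three-contact cut-off. Counting distinct contacts rather than raw form fills keeps one enthusiastic downloader from looking like a buying group.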

As Neil deGrasse Tyson said, the scientific method requires a hypothesis. Most B2B leaders are demanding marketing prove ROI without ever stating what they believe should happen.

Treat marketing like capital. Its job is to cause more profitable closes by multiplying your Sales effectiveness. Fund programs that show tested lift and save money and time by cutting the rest.

B2B Marketing has never been predictable or linear. Buyers are irrational. Decisions involve politics, budget constraints, competing priorities. Multiple stakeholders rarely move in straight lines.

But that doesn’t mean we can’t measure marketing. It means we’ve been measuring the wrong things and looking in the wrong places.

The companies winning today aren’t the ones with the most MQLs. They’re the ones that show up consistently when buyers are researching, earning trust across multiple stakeholders, and making sales teams more effective.

Your marketing could already be doing this.

The question is whether you’ve built the systems to see it.

If you like this content, here are some more ways I can help:

Cheers!

This article is AC-A and published on LinkedIn. Join the conversation!