Positioning, Messaging, and Branding for B2B tech companies. Keep it simple. Keep it real.

Contrary to what martech tools like HubSpot say, marketing has never been predictable or linear. And it doesn’t drive revenue directly. It multiplies sales effectiveness, shortens the path to “yes,” and protects future growth. Here’s what every B2B tech leader should know.

Last week, HubSpot sent out this email:

The gist of it was to promote their new AI-powered features, as if AI will make marketing predictable like it once was.

“Marketing used to be predictable and linear. Not anymore.”

Really?

Um, marketing was never predictable. Never linear. Not in B2B, not in B2C, not anywhere.

And framing it this way is exactly why companies waste time and money chasing shortcuts.

For the past 15–20 years, B2B marketing has been hooked on “performance marketing” because of promises made by software companies (HubSpot is hardly the only one here).

Marketers were sold a bill of goods: predictable funnels, lead-to-revenue models, and real-time multi-touch attribution displayed on pretty dashboards.

Hey, I’m just as guilty. I drank the Kool-Aid too.

But the results? Nothing to be excited about.

Research and data analysis have proven the opposite:

The idea that marketing once marched buyers in a straight line from awareness to deal? That's a fairy tale.

And the idea that AI will restore predictability? Wishful thinking.

Here’s the real distinction every executive should know:

Marketing creates the conditions for sales to succeed:

But it does not “drive” revenue on its own. Presenting it that way sets CMOs up against an impossible benchmark, one the CFO and board will never buy.

Dale W. Harrison explains this clearly in How Marketing Creates Revenue.

His model shows the incremental effects of sales, performance marketing, and brand marketing combined:

This illustrates that marketing is a non-linear multiplier of a linear function like sales. It produces zero direct revenue, but it radically amplifies sales.
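To make the multiplier idea concrete, here is a minimal sketch. To be clear, this is not Dale's model. The function names, the saturating curve, and every number in it are my own assumptions, chosen only for illustration. Sales is the linear part (revenue scales with opportunities worked), and marketing only changes the win rate, with diminishing returns.

```python
import math

def win_rate(base_rate: float, marketing_spend: float,
             max_lift: float = 1.5, scale: float = 200_000.0) -> float:
    """Hypothetical curve: marketing lifts the win rate with diminishing returns."""
    lift = 1.0 + max_lift * (1.0 - math.exp(-marketing_spend / scale))
    return min(base_rate * lift, 1.0)

def revenue(opportunities: int, deal_size: float,
            base_rate: float, marketing_spend: float) -> float:
    """Sales is the linear part: revenue scales with opportunities worked."""
    return opportunities * deal_size * win_rate(base_rate, marketing_spend)

if __name__ == "__main__":
    # Assumed inputs: 100 opportunities, $50K deals, 10% base win rate.
    for spend in (0, 200_000, 400_000):
        print(spend, round(revenue(100, 50_000, 0.10, spend)))
```

In this toy version, doubling the opportunities doubles revenue, doubling the marketing spend does not, and with zero opportunities no amount of marketing produces a dollar.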

PS: Dale’s The Mythology of Brand Growth is an insightful follow-up to Byron Sharp’s book, How Brands Grow (mentioned above). Definitely worth reading.

Mark Stouse and I have discussed this many times.

He reinforces this reality with two important points:

This is CFO-friendly language: delayed, compounding effects that expand efficiency and probability.

The “predictable funnel” story keeps getting pushed for a number of reasons.

This last point matters. When we stop questioning, we stop leading. And when we outsource our thinking to vendors, we reduce marketing to a support function instead of a growth function.

The messy reality? It’s inherent in the system.

But that’s okay! Because the real job of marketing is to:

This is how CEOs and CFOs should frame marketing internally: as a capital investment in future efficiency, not as a vending machine for leads.

If you lead a B2B tech company, here are three shifts worth making:

1. Stop asking for linear attribution.

2. Treat marketing outcomes as probabilistic, not deterministic.

3. Listen to Peter Drucker: Reframe marketing as capital allocation, not cost.
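For the second shift, here is a rough sketch of what "probabilistic" can look like in practice. It is a toy simulation, not a forecasting method from any of the sources above. The deal count, win probability, and deal size are made-up assumptions. The point is that the honest answer is a range, not a single number.

```python
import random

def simulate_quarter(open_deals: int, win_prob: float, deal_size: float,
                     runs: int = 10_000, seed: int = 42) -> list[float]:
    """Simulate many possible quarters; each deal is won independently."""
    rng = random.Random(seed)  # fixed seed so the sketch is repeatable
    outcomes = []
    for _ in range(runs):
        wins = sum(1 for _ in range(open_deals) if rng.random() < win_prob)
        outcomes.append(wins * deal_size)
    return sorted(outcomes)

def percentile(sorted_values: list[float], pct: float) -> float:
    """Simple percentile lookup on an already sorted list."""
    idx = min(len(sorted_values) - 1, int(pct / 100 * len(sorted_values)))
    return sorted_values[idx]

if __name__ == "__main__":
    # Assumed inputs: 40 open deals, 20% win probability, $50K per deal.
    outcomes = simulate_quarter(open_deals=40, win_prob=0.20, deal_size=50_000)
    low, mid, high = (percentile(outcomes, p) for p in (10, 50, 90))
    print(f"P10 ${low:,.0f} | P50 ${mid:,.0f} | P90 ${high:,.0f}")
```

A deterministic plan would report one figure (40 × 20% × $50K = $400K). The simulation reports a spread around it, which is a more useful conversation to have with a CFO.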

And here’s another important point:

Marketing must raise the bar. We’ve grown complacent. Too often we accept whatever martech vendors tell us instead of doing the harder work of insight, strategy, positioning, and brand-building. Marketing can only reclaim its full power when we start asking tougher questions again.

Marketing is not predictable or linear. It never was.

It’s complex and messy.

Why? Because people are complex, irrational, and messy.

The power of marketing lies in its ability to compound over time, multiply sales, and shape the likelihood of revenue.

If you like this content, here are some more ways I can help:

Cheers!

This article is AC-A and published on LinkedIn. Join the conversation!

B2B marketing and sales systems have been built around funnels, gated content, and MQLs. Yet that’s not how marketers or salespeople themselves buy. Research from Gartner, The CMO Survey, 6sense, and TrustRadius shows that buyers constantly move in and out of non-linear journeys. Most prefer self-serve information and make decisions based on brand familiarity. To fix the disconnect, B2B leaders need to rebalance short-term lead gen with long-term brand building, align metrics to real buying signals, and simplify the path to purchase... just like they would buy!

Marketers don’t buy the way they market.

Salespeople don’t buy the way they sell.

And yet this is the system they keep building. Funnels. Gates. Attribution reports. MQL handoffs.

If we wouldn’t buy this way ourselves, why do we expect our customers to?

For the past 15-20 years, B2B Marketing has drifted from strategy to tactics.

The 4Ps still exist, but most teams only control one of them: Promotion.

Pricing lives with Finance. Place lives with Ops. Product lives with Product.

According to Marketing Week, just over a quarter of marketers (25.8%) influence pricing, and only 7.2% play a role in distribution.

That vacuum gave SaaS a chance to redefine marketing as lead generation. “Demand gen” became another name for filling forms. Forrester and 6sense's Kerry Cunningham have both argued that MQLs don’t reflect how people actually buy.

Funnels and attribution models look pretty on a dashboard. But buyers don’t move in straight lines.

Research from Gartner shows the B2B journey is non-linear (hasn’t it always been?!?). Buyers have always looped, revisited, and often repeated steps out of order over weeks, months, and even years before creating their Day 1 list.

And in complex B2B deals, 77% of buyers say their latest purchase was “very complex or difficult,” with 6–10 decision makers involved and most research done before speaking to sales (AdvertisingWeek).

So while GTM teams celebrate form fills, most real buying happens elsewhere.

For CEOs: Wasted spend. Growth slows when funnels don’t reflect reality.

For CFOs: Inefficiency. Chasing MQLs inflates CAC and distorts ROI.

For CMOs: Frustration. Defending metrics everyone knows don’t map to revenue.

There is a way forward.

Brand + Buying Signals + Sales Alignment = Better Buying Experience

The good news is many seasoned B2B marketing leaders are calling out the elephant in the room and getting back to basics.

The mood is changing.

For more, dive deeper with the End Of MQLs series.

In a nutshell, marketers don’t buy the way they market. Salespeople don’t buy the way they sell.

And just like the buyers they market and sell to, they never have!

It’s time to stop forcing buyers through systems we wouldn’t tolerate ourselves.

The best time to reset was yesterday.

The second-best time is now.

Cheers!

This article is AC-A and published on LinkedIn. Join the conversation!

By Gerard Pietrykiewicz and Achim Klor

Achim is a fractional CMO who helps B2B GTM teams with brand-building and AI adoption. Gerard is a seasoned project manager and executive coach helping teams deliver software that actually works.

AI adoption gets stalled by leadership gaps: confusing policies, employee fear, and leaders who say “go” but don’t show how. If this feels a bit like Groundhog Day, you’re not alone. We’ve seen similar adoption challenges with desktop publishing, the Internet and World Wide Web, and blockchain. The technology is ready, but organizations stumble on the people side. This article looks at what leaders can do right now to remove those barriers and make adoption a little less stressful.

Jim Collins, in How The Mighty Fall, describes how once-great companies decline: hubris born of success, denial of risk, and grasping for silver bullets instead of facing reality. AI adoption sits at a similar crossroads. Companies that wait and assume their past success buys them time risk sliding down a similar path.

Reset (5 min):

Decisions today (10 min):

Guardrails (10 min):

Metrics (5 min):

Employees avoid tools they don’t understand. If your AI usage rules look like a legal brief, adoption will stall. It's hard for any company to have a policy loose enough to allow for easy adoption and experimentation, yet restrictive enough to prevent critical data leakage.

Large corporations often have the budget, legal teams, and even their own data centers to set up AI policies and infrastructure. That gives them speed at scale.

Smaller companies are technically more nimble, but without sufficient resources, they often default to over-restriction, sometimes banning AI entirely out of fear of risk. That means lost productivity and missed learning opportunities.

Opportunity: Make policies visual, clear, and quick to navigate. The goal isn’t control. It’s confidence. Guidance like the NIST AI Risk Management Framework shows how clarity enables trustworthy, scalable use (NIST).

Employees fear what they don’t understand. And one-size-fits-all training doesn’t help.

When people see AI applied to their specific role (automating a report, simplifying customer emails), that fear turns into enthusiasm.

Pilot programs work. Early adopters can demonstrate real use cases, and their wins spread fast inside the org.

Opportunity: Treat those early adopters as internal champions. Prosci’s research shows “change agents” accelerate adoption (Prosci). Then turn those wins into short internal stories and customer-facing examples. That’s how adoption builds brand credibility, not just productivity.

When executives hesitate, teams hesitate. The reverse is also true: when leaders use AI themselves, adoption accelerates.

Research on organizational change is clear: active, visible sponsorship is a top success factor (Prosci). It signals that experimentation is safe and expected.

And there’s an external benefit too. Leaders who show their own AI use give customers and partners confidence. It’s a market signal.

Opportunity: Leaders can’t delegate this. They need to be participants, not just sponsors.

To make AI adoption successful, leaders must create an environment where experimentation feels safe and useful.

The parallels to earlier waves of tech adoption are uncanny: the ones who figured this out first didn’t just get more efficient. They were remembered as the ones who defined the category because they were more effective at adopting the tech.

The risk of waiting isn’t just lost productivity. It’s losing the perception battle before you even start. Credible stories and visible leadership shape buying decisions and long-term trust (Edelman–LinkedIn).

Leaders: simplify, experiment, participate, and share your wins. Your teams and your customers will thank you.

If you like this co-authored content, here are some more ways we can help:

Cheers!

This article is AC-A and published on LinkedIn. Join the conversation!

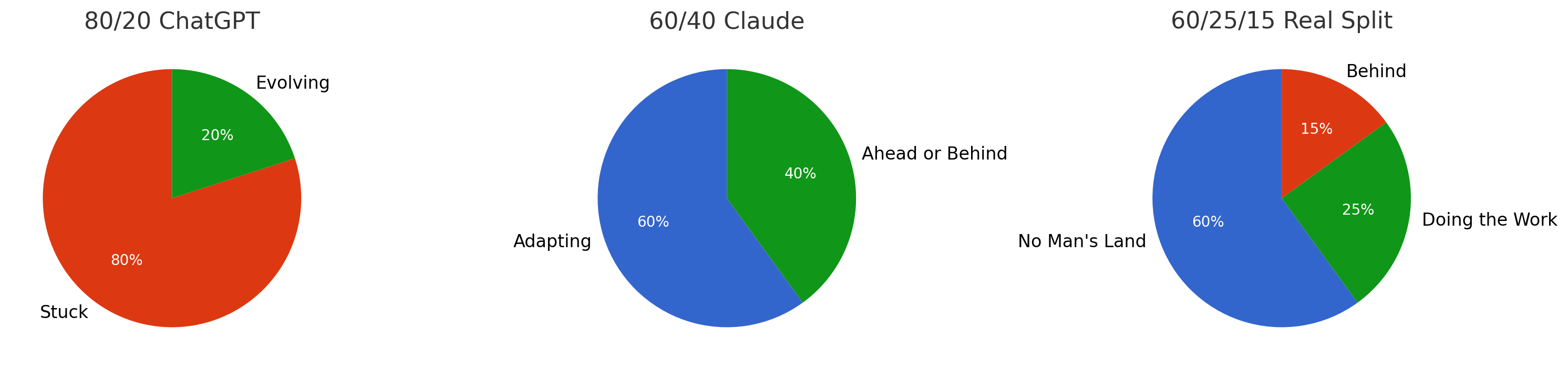

I read an interesting article on TechRadar by John-Anthony Disotto. He used the 80/20 rule to create a prompt that “makes learning skills incredibly easy.” It’s not mathematically deep or overly technical. So for fun, I gave it a go using B2B GTM. It worked, but not the way I expected.

The prompt is dead simple:

“Give me the 80/20 of X.”

Want to learn how to kickflip, cook sugo, or serve a tennis ball? Ask your favorite GenAI tool for the 80/20 and it will spit back a decent analysis based on Pareto’s famous principle.

That said, as with all “absolutes”, take it with a grain of salt and pull out the nuggets you need.

But it got me thinking:

Could this actually work for something as messy and misunderstood as B2B tech GTM?

I gave it a shot.

I first asked ChatGPT this prompt:

“What’s the 80/20 of B2B tech GTM in 2025?”

The answer came back fast using typical AI phrasing.

80% of teams are stuck in the past:

20% are evolving:

The gist wasn’t wrong, but a few things were head-scratchers, like “Using Causal AI” and “Marketing drives strategy.”

It sounded too binary. Too neat.

So I then asked ChatGPT:

“Rigorously fact-check your 80/20 assessment for accuracy and credibility.”

The revised output was a bit more grounded.

20% of teams are winning because they:

80% are plateaued by:

OK, better. But I still wasn’t convinced.

Still skeptical, I sent the same prompt to Claude.

Claude came back with a softer read. It said it’s more like a 60/40 split. Most teams are adapting, but slowly and inconsistently.

Claude made some good points:

I went back and forth with Claude and ChatGPT. Both made valid calls and pointed out where they aligned and where they differed.

ChatGPT pushed back on Claude’s assessment:

“Claude’s response is solid—and mostly accurate. But Claude’s framing underplays the issues.”

After more back-and-forth, we landed somewhere in the middle.

60/25/15 is a more accurate split.

60% are stuck in no man’s land:

25% of B2B teams are doing the work:

15% are way behind:

Which one sounds like your Monday morning standup?

If you’re in the 60%, pick one:

Every modern B2B GTM team should be asking:

AI, brand, content, buyer insight—none of it works in isolation.

What separates the top 25% isn’t access. It’s consistency. They’ve operationalized what the rest are still experimenting with.

The “80/20” prompt worked, but not how I expected.

It won’t give you a perfect framework. That’s OK. It doesn’t need to be perfect.

A yardstick that validates what you already know and uncovers some new truths is more than good enough. Whether the actual number is 65.7% instead of 60% doesn’t really matter.

The point is, most GTM teams in 2025 know what to do. They’re just not doing it strategically or consistently. And they’re not proving it works.

That’s the gap.

The teams pulling ahead aren’t chasing leads or trends. They’re going back to the basics, putting insight and strategy ahead of tactics. And they’re doing it better, faster, and with accountability.

They treat brand as a signal, not decoration. AI as infrastructure, not novelty. GTM as shared responsibility, not departmental silos.

And they measure what matters, not what’s easy.

They do the hard part first.

Where does your GTM sit?

Cheers!

This article is AC-A and published on LinkedIn. Join the conversation!

CMOs can make a big difference with CausalAI. It starts with what Mark Stouse calls a “quiet pilot.” Not a pitch. Not a deck. In Part 6 of The Causal CMO, Mark explains how GTM leaders can use causal modeling to run skunkworks projects in the background without wasting months seeking buy-in or permission. We used “deal velocity” as our project, but you can apply it to any core outcome: CAC, LTV, funnel integrity, partner yield, brand equity, even recruiting.

As we touched on in Part 4 and Part 5, the goal of a “quiet pilot” is to protect signal integrity. It’s not about secrecy.

If you announce you’re piloting CausalAI before you’ve proven anything, internal pressure will spike. Opinions will fly. Fear will kick in. And you’ll waste all your energy managing reactions instead of learning.

“There’s nothing unethical about doing a quiet pilot. In fact, it’s probably the most ethical way to test something that matters.”

You want to reduce friction, not accountability. To observe, adjust, and verify results before you invite others in.

Causal modeling isn’t magic. It’s just math applied to three distinct domains:

Most teams obsess over 1 and 2. But externalities drive 70–80% of performance. You’re not here to brute-force your way through them. You’re here to surf them.

“You can have the best mix in the world and still fail—if you ignore externalities.”

We used a B2B SaaS scenario:

Here’s how Mark broke it down:

Mark’s personal experience at Honeywell Aerospace demonstrates the effectiveness of running a quiet pilot:

“We improved deal velocity by almost 5%. That’s $11–12 billion of revenue moving faster into the company. The cash flow impact was extraordinary. The CFO became a fan.”

You don’t need perfection to start. You need clarity.

Start with a question like this one:

“Out of everything we’re doing, what’s really driving deal velocity?”

“Even synthetic models become templates for real ones later.”

Watch the forecast. Compare it to actuals. Adjust your mix. Then track again.

You’ll feel the change before you explain it. So will others.

“People will pass you in the hallway and say, ‘Something feels different.’ That’s your moment.”

You’re going to get humbled. So be prepared.

“You’ll realize most of what you’ve been tracking is noise. You’ll grieve. You’ll deny. You’ll get angry. Then you’ll change.”

It’s normal to go through disbelief, regret, frustration and even grief as you uncover how much of your GTM effort was based on correlation or gut feel.

But that’s the cost of clarity.

And the reward?

A system that actually tells you what’s working and how to make it better.

Not too soon. Let the model mature.

Here’s a rough timeline:

That’s when you gain credibility and the CEO and CFO lean in.

That’s when the board invites you to present.

That’s when peers start asking:

“If I gave you more budget, what could you do with it?”

Your story isn’t based on aspiration. It’s built on change.

Mark was very clear about the cross-functional application of CausalAI.

You can apply causal models across the entire business:

If your team owns outcomes, causal modeling can help you prove what drives them, even outside GTM.

If you’re a CMO, CRO, or GTM lead hoping to “earn your seat at the table,” this is how you do it. Not with big claims or flashy decks. With evidence.

“This isn’t a threat. It’s a lifeboat. Everything else is the risk.”

You don’t need better math. You need better questions and the courage to ask them before you sell the answer.

If you want to see what this looks like in practice, Mark has demo videos and 1:1 sessions available. Reach out to him directly on LinkedIn or email him at mark.stouse@proofanalytics.ai

Missed the LinkedIn Live session? Rewatch Part 6.

Cheers!

This article is AC-A and published on LinkedIn. Join the conversation!

Marketing teams that obsess over MTA, MQLs, and CTR/CPL are under more pressure than ever. BS detectors are on high alert in the boardroom. In Part 5 of The Causal CMO, Mark Stouse outlines what the C-suite already expects: GTM leaders who are fluent in proof, spend, and business acumen.

As Mark and I already discussed, the rules changed in 2023.

The Delaware Court of Chancery’s 2023 ruling extended the fiduciary duty of oversight from boards to corporate officers, including CMOs, CROs, CDAOs, and other GTM leaders.

That means we’re now individually accountable for risk oversight and due diligence. Not just our intent. Our judgment too.

“This is changing the definition of the way business decisions are evaluated… What did you do to test that? What did you do to identify risk and remediate the risk?”

Boardroom expectations have shifted. They want marketing accountability, not activity metrics. If your GTM budget is still defended with correlation math, you’re going to lose the room.

Causal AI gives you something different: Proof.

It tests what causes performance and why, how much it contributed, and what to do next.

It operates a lot like a GPS, recalculating your position in real time, suggesting alternate routes, and showing what could happen under different conditions.

Boards don’t want us to show them more dashboards. Think of it like the bridge of a ship. The Captain and First Officer

They want decision clarity:

Causal AI models cause and effect based on live conditions, not lagging indicators. It runs continuously. It adjusts to change. It simulates outcomes with real or synthetic data.

“GPS says, ‘I know where you are.’ You say where you want to go. It gives you a route. Then, if something changes—an accident, traffic—it reroutes. Causal AI works the same way.”

Mark shared a great story from one of his clients. During COVID, the finance team at Johnson Controls planned to cut marketing by 40%. But causal modeling showed how that would destroy revenue 1, 2, and 3 years out.

“The negative effects… were terrible. Awful. Like, profoundly wretched.”

Finance still made cuts, but only by 15%, not 40%. Because the data made the risk real.

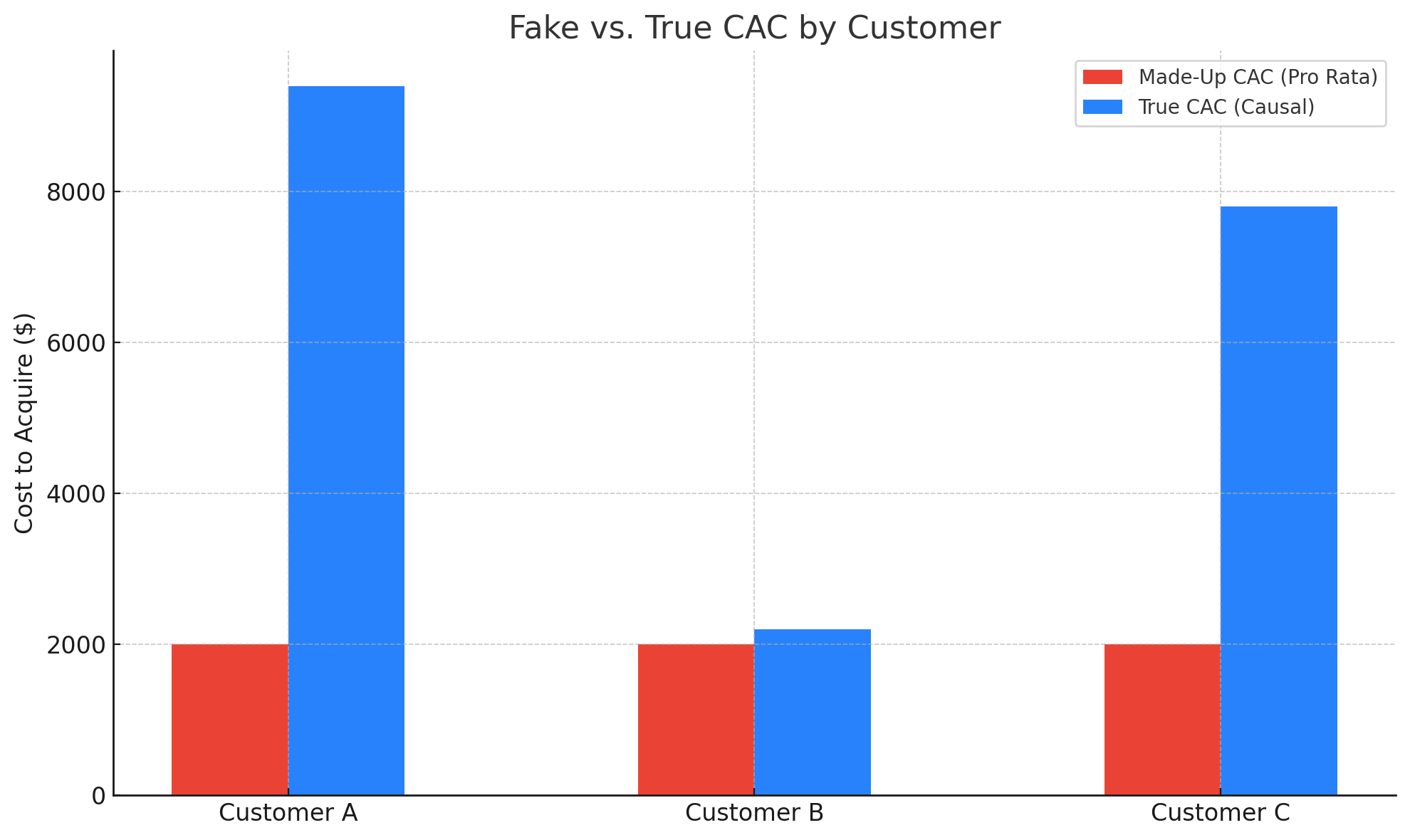

A lot of B2B companies still treat CAC and LTV as truth. Mark didn’t mince words:

“CAC is a pro rata of some larger number. That pro rata is not real.”

And LTV?

“In the vast majority of cases, it’s completely made up.”

The bigger issue: CAC isn’t just a cost. It’s a form of debt. If you spend $250K chasing an RFP and don’t keep the client long enough to pay that back, you’re in the red. Period.
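Here is a back-of-the-napkin sketch of the "CAC as debt" idea. Only the $250K comes from the example above. The monthly revenue, gross margin, and retention figures are assumptions I picked for illustration, and this is not Mark's method for calculating true CAC.

```python
def months_to_payback(acquisition_cost: float, monthly_revenue: float,
                      gross_margin: float) -> float:
    """Months of retained revenue needed to repay the acquisition 'debt'."""
    monthly_contribution = monthly_revenue * gross_margin
    if monthly_contribution <= 0:
        return float("inf")  # the debt is never repaid
    return acquisition_cost / monthly_contribution

def net_position(acquisition_cost: float, monthly_revenue: float,
                 gross_margin: float, months_retained: int) -> float:
    """Margin earned over the client's lifetime minus what it cost to win them."""
    return monthly_revenue * gross_margin * months_retained - acquisition_cost

if __name__ == "__main__":
    # $250K spent chasing the RFP (from the text); $20K/month at 75% margin (assumed).
    print(months_to_payback(250_000, 20_000, 0.75))
    print(net_position(250_000, 20_000, 0.75, months_retained=12))
    print(net_position(250_000, 20_000, 0.75, months_retained=24))
```

With these assumed numbers, a client who leaves after 12 months never repays the debt, while one who stays 24 months does. Same acquisition cost, opposite outcome.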

This mindset shift matters most for CMOs trying to earn budget.

“You’ve got to understand unit economics of improvement… how much money it takes to drive real causality in the market. That’s true CAC. Not the BS a lot of teams have been selling.”

What does that look like?

The chart below is a simulated example of a typical flat MTA pro rata model compared to a variable causal model.

To be fair, it’s not always deception. It’s often desperation. Most teams are never given the tools to calculate real causality.

CMOs say they want a seat at the table. But most still operate like support teams.

If you want credibility in the boardroom, act like it’s your business and your money.

“I became very good at interpreting marketing into the language of whoever I was talking to, like HR, Legal, Finance, the CEO. No marketing jargon. Just business terms.”

Boards fund systems that scale. That means reframing GTM as a system, not a series of tactics. It’s a mindset that requires critical thinking and letting go of outdated playbooks.

“This is the difference between being seen as a business contributor and being a craft shop.”

Start learning finance. Take courses. Do sales training. Train your team. Speak the language of the business. That’s how you earn respect and influence decisions.

Part 4 covered how to start a skunkworks project:

In Part 5, Mark explains why the silence matters.

“You want to assemble your story of change. You won’t have that if you declare you’re doing this up front.”

The goal here is to earn sequential trust over time, not to be secretive. When people feel the improvement first, they’re far more likely to believe the explanation later.

“If they already believe it, they’ll accept the facts. If they hear the facts first, they’ll resist.”

So don’t lead with a deck. Don’t sell a vision. Build causal models behind the scenes. Learn what’s working. Adjust what’s not. Let the results speak. The key is to keep learning.

Then, when the timing’s right, you can confidently walk into the boardroom with a better story and the data to back it up.

The hardest part of this shift isn’t modeling. It’s having the guts to do the right thing instead of always doing things right.

“The biggest issue we all face is courage. The courage to act.”

Too many marketing leaders stay stuck because it’s safer. Even if nothing changes.

“If you’ve tried the old approach your whole career and it hasn’t worked… then you’ve got to change.”

And according to Mark, to be that change, you have to stop waiting for permission, stop hiding behind bad math, and start proving your worth quietly, confidently, and causally.

Navigating a business is kind of like flying a plane. Causal AI gives GTM teams the instruments they need to fly safely through volatility. It does more than “keep you in the air.” It helps you choose better paths when visibility disappears.

Bringing Causal AI into GTM requires a mindset shift. Marketing leaders will need to let go of legacy systems like MTA because the change is coming fast.

Here’s where to begin:

If you want a seat at the table, you need to earn it and prove it.

Missed the LinkedIn Live session? Rewatch Part 5.

Cheers!

This article is AC-A and published on LinkedIn. Join the conversation!